Can a Machine Learning System Outperform Humans in Emotion Recognition? - Valentina Zhang

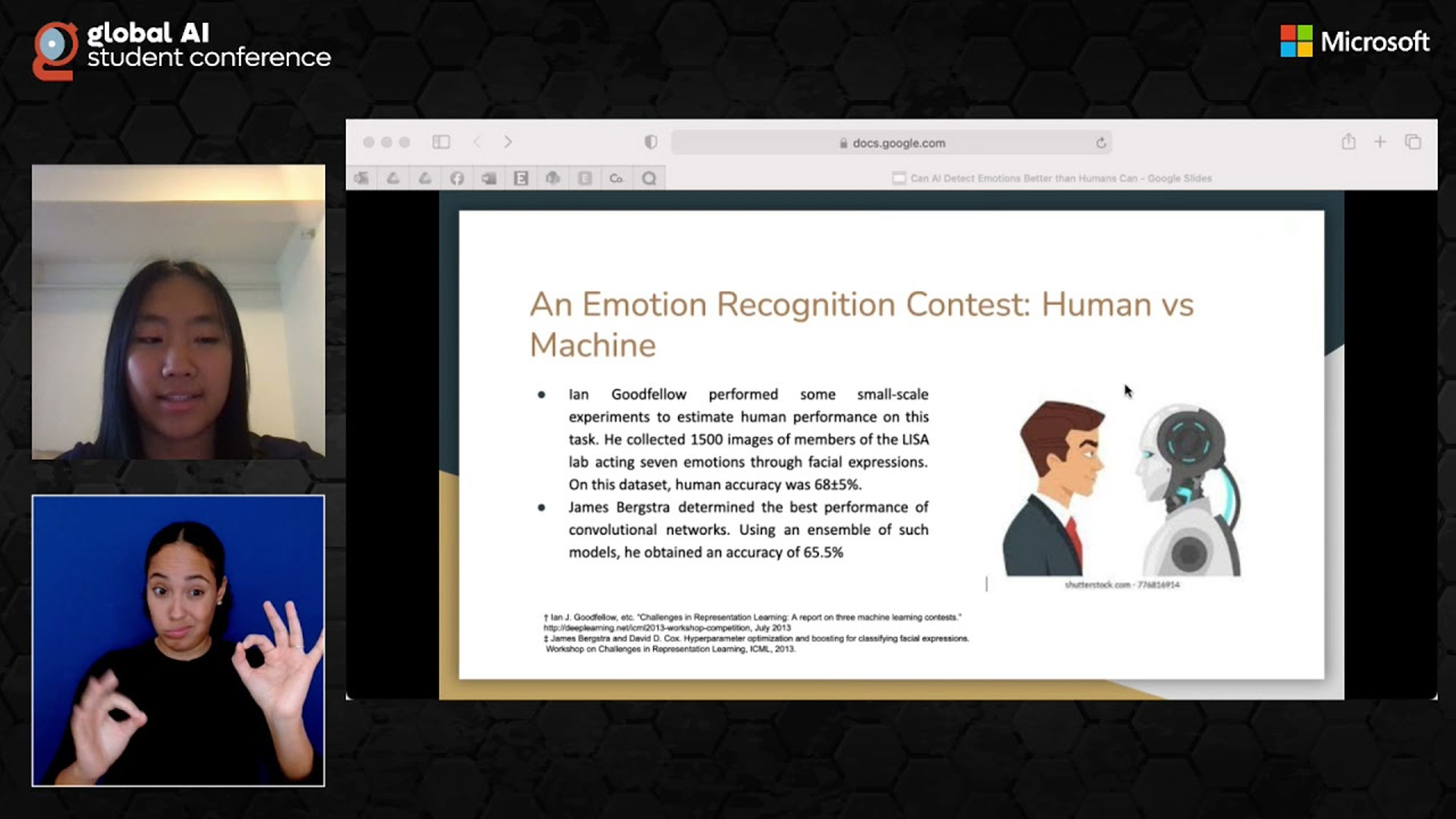

In 1961, when Carl Sagan learned that a NASA spacecraft bound for Venus would carry no cameras because other scientists considered them excess weight, he campaigned for the chance to see the alien planet up close. A similar question arises in machine learning (ML)-based facial emotion recognition: is landmark-based training sufficient, or does image-based training still have irreplaceable value? I believe that different facial representations can provide different, sometimes complementary, views of emotion. Used together, they enable more reliable group emotion recognition.

In this talk, I will first introduce my study comparing an image-trained ML model with a landmark-trained ML model for emotion recognition on a popular deepfake video. My experiment indicates that the landmark-trained model performs better on clearly articulated facial feature changes, while the image-trained model performs better on subtle facial expressions. Armed with these findings, we will take a tour of my adaptive group emotion recognition system for small discussion groups. After sharing results on a collection of group discussion videos from YouTube, I will wrap up by showing the benefits of an adaptive ML-based approach that automatically learns from both image and landmark facial representations, models group emotion dynamics, and augments individual emotion recognition.

Valentina Zhang, Phillips Exeter Academy

Valentina Zhang is a student at Phillips Exeter Academy. She is an alumna of MIT BWSI, where she studied machine learning in medicine, and an intern at a start-up building ML-based solutions. Besides technology and neuroscience, Valentina's interests include biology and community service. She helped digitize a 250-year-old library, and her tomatoes won the Best Tasting Tomatoes award at a contest hosted by Boston Public Market.