Global AI Weekly

Issue number: 5 | Monday, May 1, 2023

Highlights

How GPT models work

During training, the model is exposed to a large amount of text and learns to predict good probability distributions for the next token, given a sequence of input tokens. The model doesn't actually produce a single predicted token; instead, it returns a probability distribution over all the possible tokens.

bea.stollnitz.com
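To make "a probability distribution over all the possible tokens" concrete, here is a minimal Python sketch. It is not code from the linked article. The four-word vocabulary and the logit values are made up for illustration; a real GPT model produces one raw score (logit) per token in a vocabulary of tens of thousands of entries or more. The softmax step turns those scores into probabilities that sum to 1, and the last lines show the standard next-token cross-entropy loss, which rewards the model for assigning high probability to the token that actually came next.

```python
import math
import random


def softmax(logits: list[float]) -> list[float]:
    """Turn raw model scores (logits) into probabilities that sum to 1."""
    largest = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical toy vocabulary and logits for the token after some prompt.
vocab = ["cat", "dog", "car", "tree"]
logits = [2.0, 1.0, 0.1, -1.0]

probs = softmax(logits)
for token, p in zip(vocab, probs):
    print(f"{token}: {p:.3f}")

# Greedy decoding takes the single most likely token...
greedy = vocab[probs.index(max(probs))]
# ...whereas sampling draws a token in proportion to its probability.
rng = random.Random(0)
sampled = rng.choices(vocab, weights=probs, k=1)[0]
print("greedy:", greedy, "| sampled:", sampled)

# During training, if the text actually continued with "dog", the
# cross-entropy loss at this position is -log P("dog").
target = "dog"
loss = -math.log(probs[vocab.index(target)])
print(f"loss for target {target!r}: {loss:.3f}")
```

Subtracting the largest logit before exponentiating does not change the result, since the common factor cancels in the division, but it keeps `math.exp` from overflowing when scores are large.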

New ways to manage your data in ChatGPT

ChatGPT users can now turn off chat history, which lets them choose which conversations can be used to train OpenAI's models.

openai.com

Video

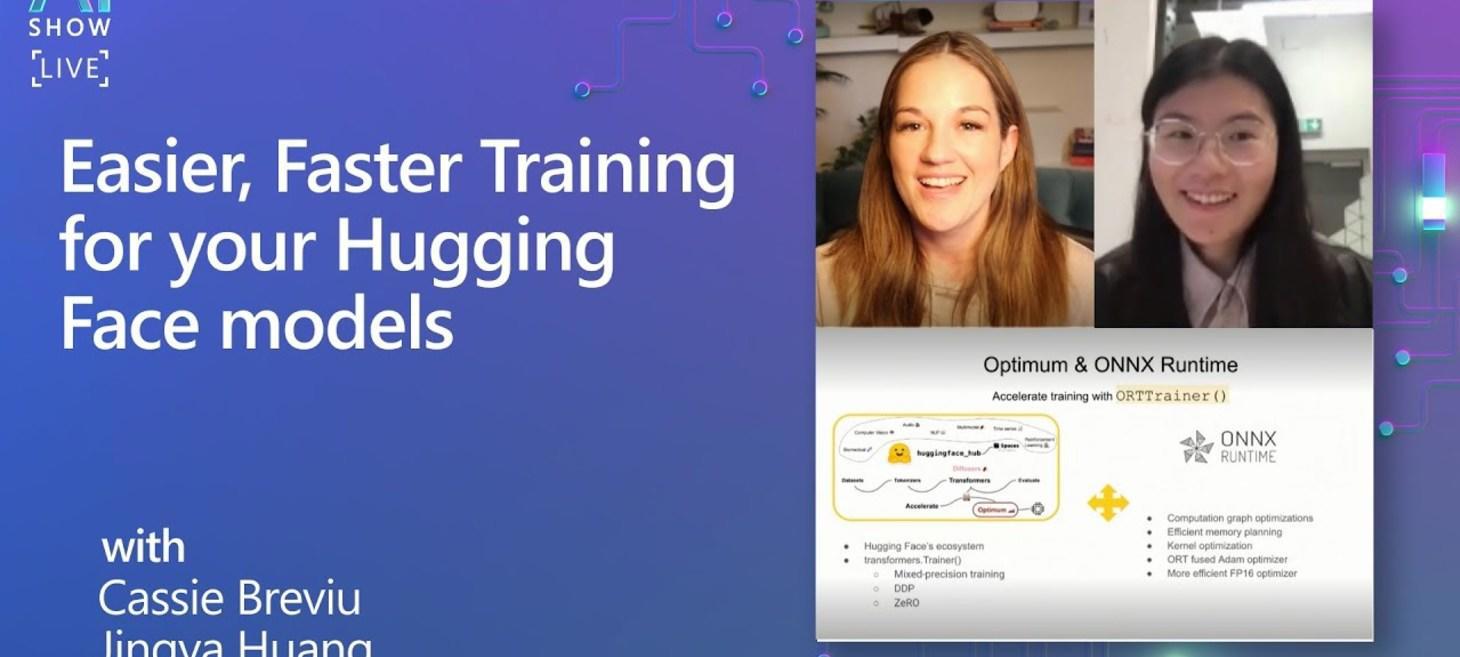

Easier, Faster Training for your Hugging Face models

Jingya Huang joins Cassie Breviu to talk about how to use Optimum + ONNX Runtime to accelerate the training of Hugging Face models. In the demo, they fine-tune the DeBERTa model with Trainer and show the performance gain from using the ONNX Runtime backend.

youtube.com

Articles

What is Auto-GPT and why does it matter?

Silicon Valley’s quest to automate everything is unceasing, which explains its latest obsession: Auto-GPT. In essence, Auto-GPT uses the versatility of OpenAI’s latest AI models to interact with software and services online, allowing it to “autonomously” perform tasks like X and Y. But as we are learning with large language models, this capability seems to […] What is Auto-GPT and why does it matter? by Kyle Wiggers originally published on TechCrunch

techcrunch.com

Introducing Hidet: A Deep Learning Compiler for Efficient Model Serving

Hidet is a powerful deep learning compiler that simplifies the process of implementing high-performance deep learning operators on modern accelerators (e.g., NVIDIA GPUs). With the new torch.compile(...) feature in PyTorch 2.0, integrating a new compiler into PyTorch is easier than ever. Hidet can now be used as a torch.compile(...) backend to accelerate PyTorch models, which makes it an attractive option for PyTorch users who want to improve the inference performance of their models, especially those who also need to implement highly optimized custom operators.
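The torch.compile(...) integration works because PyTorch 2.0 lets any callable act as a compiler backend: TorchDynamo captures the model as a torch.fx graph, hands it to the backend, and runs whatever callable the backend returns. The sketch below assumes PyTorch 2.0 or later and does not need Hidet installed. Its backend, `counting_backend`, is a hypothetical toy made up for this example: it only counts the captured operations and then runs the graph unchanged, so it speeds nothing up. According to the linked post, using Hidet instead amounts to importing `hidet` and passing `backend="hidet"`; treat the Hidet documentation as the authority on its options.

```python
import torch

# Operation counts recorded by the toy backend, one entry per captured graph.
captured_op_counts: list[int] = []


def counting_backend(gm: torch.fx.GraphModule, example_inputs):
    """Toy torch.compile backend (hypothetical, for illustration only).

    TorchDynamo passes in the captured FX graph; a real compiler such as
    Hidet would return optimized code here. This one just counts the
    operations and returns the graph's own forward, so nothing is sped up.
    """
    ops = [n for n in gm.graph.nodes
           if n.op in ("call_function", "call_method", "call_module")]
    captured_op_counts.append(len(ops))
    return gm.forward


class TinyMLP(torch.nn.Module):
    """A small stand-in model; any nn.Module would do."""

    def __init__(self) -> None:
        super().__init__()
        self.fc1 = torch.nn.Linear(8, 16)
        self.fc2 = torch.nn.Linear(16, 4)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.fc2(torch.relu(self.fc1(x)))


model = TinyMLP().eval()
compiled = torch.compile(model, backend=counting_backend)

x = torch.randn(2, 8)
with torch.no_grad():
    eager_out = model(x)
    compiled_out = compiled(x)  # the first call triggers graph capture

print("outputs match:", torch.allclose(eager_out, compiled_out))
print("ops per captured graph:", captured_op_counts)
```

Apart from the backend argument, the call site stays the same, which is what makes trying a new compiler such as Hidet cheap for existing PyTorch code.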

pytorch.org

cuCatch: A Debugging Tool for Efficiently Catching Memory Safety Violations in CUDA Applications

CUDA, OpenCL, and OpenACC are the primary means of writing general-purpose software for NVIDIA GPUs, all of which are subject to the same well-documented memory safety vulnerabilities currently plaguing software written in C and C++.

research.nvidia.com

Hugging Face releases its own version of ChatGPT

Hugging Face, the AI startup backed by tens of millions in venture capital, has released an open source alternative to OpenAI’s viral AI-powered chatbot, ChatGPT, dubbed HuggingChat. Available to test through a web interface and to integrate with existing apps and services via Hugging Face’s API, HuggingChat can handle many of the tasks ChatGPT can, […] Hugging Face releases its own version of ChatGPT by Kyle Wiggers originally published on TechCrunch

techcrunch.com

MEPs seal the deal on Artificial Intelligence Act

Following months of intense negotiations, members of the European Parliament (MEPs) have bridged their differences and reached a provisional political deal on the world’s first Artificial Intelligence rulebook.

euractiv.com