Global AI Weekly

Issue number: 57 | Tuesday, June 18, 2024

Highlights

From Silicon Valley to Silicon Savannah: climate expert Patrick Verkooijen on why this is Africa’s century

The University of Nairobi’s new chancellor says the continent has vast potential – but to realise the promise of AI and green jobs, rich countries must honour their commitments

Africa has all the potential to meet pressing climate challenges with innovative solutions, according to one of the world’s renowned environmentalists. With its vast natural capital and youthful population, “this is Africa’s century,” according to Prof Patrick Verkooijen, chief executive of the Global Center on Adaptation (GCA), and the new chancellor of the University of Nairobi.

theguardian.com

This Week in AI: Apple won’t say how the sausage gets made

Hiya, folks, and welcome to TechCrunch’s regular AI newsletter. This week in AI, Apple stole the spotlight. At the company’s Worldwide Developers Conference (WWDC) in Cupertino, Apple unveiled Apple Intelligence, its long-awaited, ecosystem-wide push into generative AI.

techcrunch.com

Introducing AutoGen Studio: A low-code interface for building multi-agent workflows

AutoGen Studio, built on Microsoft’s flexible open-source AutoGen framework for orchestrating AI agents, provides an intuitive user-friendly interface that enables developers to rapidly build, test, customize, and share multi-agent AI solutions—with little or no coding.

microsoft.com

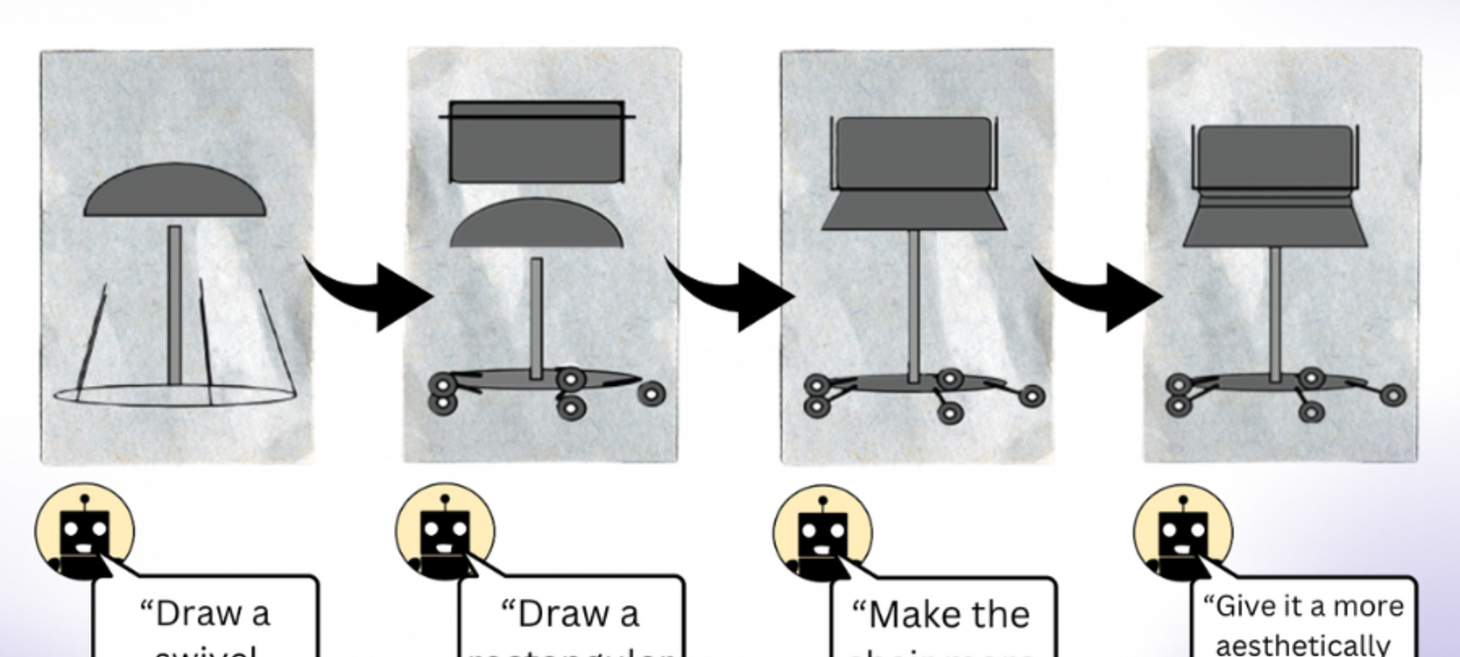

Understanding the visual knowledge of language models

LLMs trained primarily on text can generate complex visual concepts through code with self-correction. Researchers used these illustrations to train an image-free computer vision system to recognize real photos.

news.mit.edu

Research

Better interpretable neural networks with Kolmogorov networks

As more governments consider regulating AI, it is important that we gain a better understanding of how ML solutions work internally. Neural networks make it harder to see what is happening inside a solution, so some researchers are looking for ways to make them easier to interpret. This paper, for example, proposes a new way to train neural networks with that goal. Well worth a read!

arxiv.org

Video

AI Show LIVE | Prompty: Choose Your Own Adventure

Join Seth as he starts a new project in Prompty with audience participation!

youtube.com

Articles

Nemotron-4 340B

We release the Nemotron-4 340B model family, including Nemotron-4-340B-Base, Nemotron-4-340B-Instruct, and Nemotron-4-340B-Reward. Our models are open access under the NVIDIA Open Model License Agreement, a permissive model license that allows the distribution, modification, and use of the models and their outputs.

research.nvidia.com

ChatGPT is coming to your iPhone. These are the four reasons why it’s happening far too early | Chris Stokel-Walker

The AI’s errors can still be comical and catastrophic. Do we really want this technology to be in so many pockets? Tech watchers and nerds like me get excited by tools such as ChatGPT. They look set to improve our lives in many ways – and hopefully augment our jobs rather than replace them.

theguardian.com

How researchers cracked an 11-year-old password to a crypto wallet

If you used the RoboForm password manager to generate a password prior to their 2015 bug fix, that password was generated using a pseudo-random number generator based on your device’s current time. This means an attacker may be able to brute-force the password from a much shorter list of options if they can derive the rough date when it was created.

simonwillison.net
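The RoboForm item above turns on one idea: if a generator's only source of randomness is the clock, then knowing roughly when a password was created shrinks the search to one candidate per possible timestamp. The sketch below illustrates that idea only. It is not RoboForm's algorithm; `generate_password`, the alphabet, the 12-character length, the one-second seed resolution, and the creation time are all assumptions made up for the example.

```python
import random
import string
from datetime import datetime, timedelta, timezone
from typing import Callable, Iterator, Optional, Tuple

ALPHABET = string.ascii_letters + string.digits


def generate_password(seed_time: int, length: int = 12) -> str:
    """Hypothetical flawed generator: the PRNG seed is a Unix timestamp in seconds."""
    rng = random.Random(seed_time)
    return "".join(rng.choice(ALPHABET) for _ in range(length))


def candidates(start: datetime, end: datetime, length: int = 12) -> Iterator[Tuple[int, str]]:
    """Yield one (timestamp, password) pair per second in [start, end)."""
    for ts in range(int(start.timestamp()), int(end.timestamp())):
        yield ts, generate_password(ts, length)


def brute_force(
    is_correct: Callable[[str], bool],
    start: datetime,
    end: datetime,
    length: int = 12,
) -> Optional[int]:
    """Return the first timestamp whose password passes the check, or None."""
    for ts, password in candidates(start, end, length):
        if is_correct(password):
            return ts
    return None


# Demo with an arbitrary, made-up creation time (not taken from the article).
created = datetime(2014, 3, 1, 12, 34, 56, tzinfo=timezone.utc)
secret = generate_password(int(created.timestamp()))

# The attacker only knows the creation time to within an hour.
window_start = created - timedelta(minutes=30)
window_end = created + timedelta(minutes=30)
found = brute_force(lambda p: p == secret, window_start, window_end)

print("candidates to try:", int((window_end - window_start).total_seconds()))
print("seed recovered:", found == int(created.timestamp()))
```

Under these assumptions a one-hour window means 3,600 candidates, compared with roughly 3.2 × 10^21 possible 12-character passwords over a 62-symbol alphabet. In a real attack the `is_correct` check would be an attempt to unlock the wallet rather than a comparison against a known secret.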

Reducing Model Checkpointing Times by Over 10x with PyTorch Distributed Asynchronous Checkpointing

Summary: With PyTorch distributed’s new asynchronous checkpointing feature, developed with feedback from IBM, we show how the IBM Research team was able to implement it and reduce effective checkpointing time by a factor of 10-20x. Example: 7B model ‘down time’ for a checkpoint goes from an average of 148.8 seconds to 6.3 seconds, or 23.62x faster.

pytorch.org
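To make the checkpointing item above concrete, here is a minimal standard-library sketch of the general idea behind asynchronous checkpointing: training pauses only long enough to take an in-memory snapshot, and the slow write to disk happens on a background thread. This is not the PyTorch API or IBM's implementation; `save_checkpoint_async`, `speedup`, and the toy `state` dictionary are hypothetical names chosen for illustration.

```python
import copy
import pickle
import tempfile
import threading
from pathlib import Path


def save_checkpoint_async(state: dict, path: Path) -> threading.Thread:
    """Snapshot state in memory (blocking), then write it to disk in the background."""
    snapshot = copy.deepcopy(state)  # the only part the training loop waits for

    def _write() -> None:
        # Write to a temporary file first so a crash never leaves a partial checkpoint.
        tmp = path.with_suffix(path.suffix + ".tmp")
        with open(tmp, "wb") as f:
            pickle.dump(snapshot, f)
        tmp.replace(path)

    writer = threading.Thread(target=_write)
    writer.start()
    return writer


def speedup(before_seconds: float, after_seconds: float) -> float:
    """Ratio of blocking time before and after."""
    return before_seconds / after_seconds


# Toy stand-in for model and optimizer state.
state = {"step": 100, "weights": [0.1, 0.2, 0.3]}

with tempfile.TemporaryDirectory() as tmpdir:
    ckpt_path = Path(tmpdir) / "ckpt.pkl"
    writer = save_checkpoint_async(state, ckpt_path)

    # Training continues while the write is in flight.
    state["step"] += 1

    writer.join()  # wait before relying on the file
    with open(ckpt_path, "rb") as f:
        restored = pickle.load(f)

print("checkpointed step:", restored["step"])
print("speedup from the quoted timings:", round(speedup(148.8, 6.3), 2))
```

The deep copy is what keeps the checkpoint consistent: later updates to `state` do not leak into the file being written. The last line simply reproduces the arithmetic behind the quoted figure, 148.8 / 6.3 ≈ 23.62.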

A discussion of discussions on AI Bias

There've been regular viral stories about ML/AI bias with LLMs and generative AI for the past couple years. One thing I find interesting about discussions of bias is how different the reaction is in the LLM and generative AI case when compared to "classical" bugs in cases where there's a clear bug. In particular, if you look at forums or other discussions with lay people, people frequently deny that a model which produces output that's sort of the opposite of what the user asked for is even a bug. For example, a year ago, an Asian MIT grad student asked Playground AI (PAI) to "Give the girl from the original photo a professional linkedin profile photo" and PAI converted her face to a white face with blue eyes.

danluu.com

This humanoid robot can drive cars — sort of

Out of an abundance of caution, the car took two minutes to turn a corner.

techcrunch.com

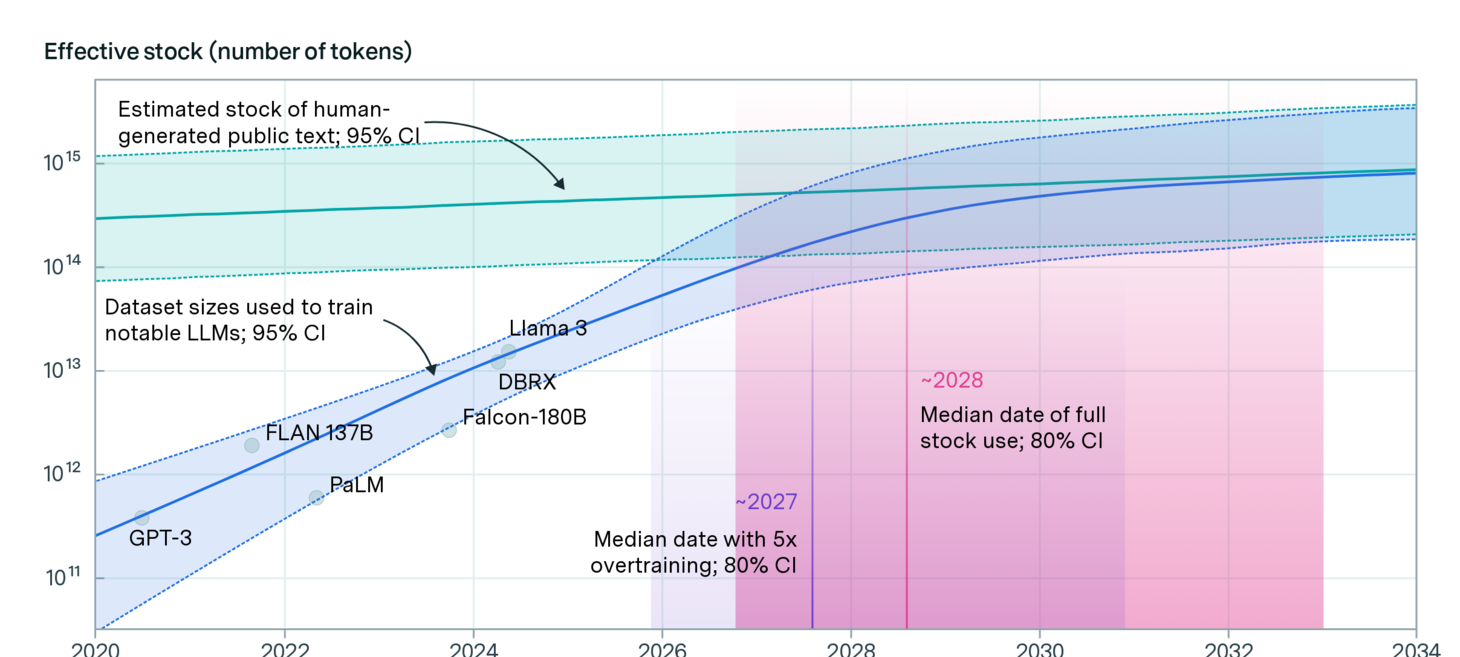

Will We Run Out of Data? Limits of LLM Scaling Based on Human-Generated Data

We estimate the stock of human-generated public text at around 300 trillion tokens. If trends continue, language models will fully utilize this stock between 2026 and 2032, or even earlier if intensely overtrained.

epochai.org

Code

Welcome to Microsoft Phi-3 Cookbook

Phi-3 is a family of open AI models developed by Microsoft. Phi-3 models are the most capable and cost-effective small language models (SLMs) available, outperforming models of the same size and next size up across a variety of language, reasoning, coding, and math benchmarks.

github.com