Global AI Weekly

Issue number: 78 | Tuesday, December 3, 2024

Highlights

Why Small Language Models Are The Next Big Thing In AI

With Elon Musk’s xAI raising $5 billion and Amazon investing $4 billion in OpenAI rival Anthropic, artificial intelligence enters the holiday season with a competitive roar.

forbes.com

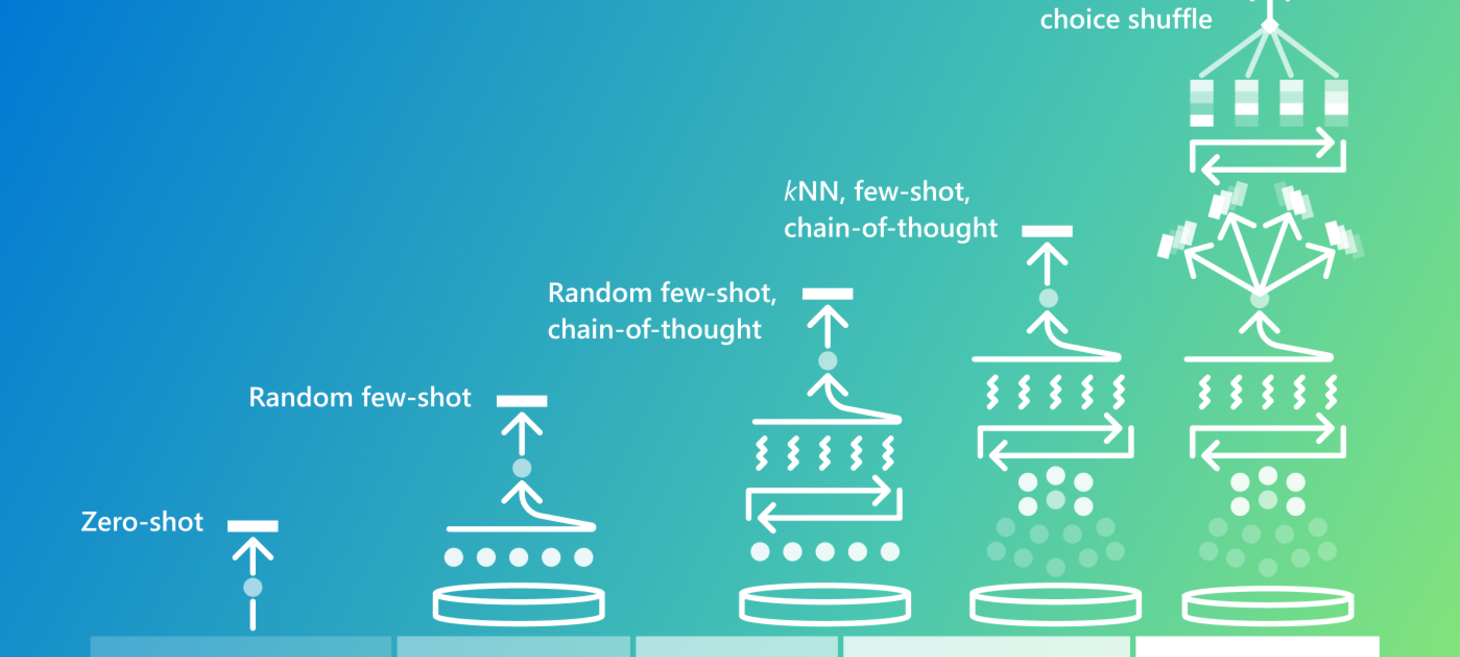

Advances in run-time strategies for next-generation foundation models

Groundbreaking advancements in frontier language models are progressing rapidly, paving the way for boosts in accuracy and reliability of generalist models, making them highly effective in specialized domains.

microsoft.com

If AI can provide a better diagnosis than a doctor, what’s the prognosis for medics? | John Naughton

Studies in which ChatGPT outperformed scientists and GPs raise troubling questions for the future of professional work. AI means too many (different) things to too many people. We need better ways of talking – and thinking – about it. Cue Drew Breunig, a gifted geek and cultural anthropologist, who has come up with a neat categorisation of the technology into three use cases: gods, interns and cogs.

theguardian.com

Ai2 releases new language models competitive with Meta’s Llama

There’s a new AI model family on the block, and it’s one of the few that can be reproduced from scratch. On Tuesday, Ai2, the nonprofit AI research organization founded by the late Microsoft co-founder Paul Allen, released OLMo 2, the second family of models in its OLMo series. (OLMo is short for “open language […]

techcrunch.com

Articles

Making News Recommendations Explainable with Large Language Models

In our latest (offline) experiment, we investigated whether Large Language Models (LLMs) could effectively predict which articles a reader would be interested in, based on their reading history.

towardsdatascience.com

Simon Willison: The Future of Open Source and AI

I sat down a few weeks ago to record this conversation with Logan Kilpatrick and Nolan Fortman for their podcast Around the Prompt. The episode is available on YouTube and Apple Podcasts and other platforms.

simonwillison.net

New Responsible AI Features | Microsoft Community Hub

We’ve had an amazing week at Microsoft Ignite! With over 80 product and feature announcements, including the launch of Azure AI Foundry (formerly, Azure AI...

techcommunity.microsoft.com

AI expert Marietje Schaake: ‘The way we think about technology is shaped by the tech companies themselves’

The Dutch policy director and former MEP on the unprecedented reach of big tech, the need for confident governments, and why the election of Trump changes everything

theguardian.com

Smaller is smarter

I’m attempting to translate these figures into something more tangible… though this doesn’t include the training costs, which are estimated to involve around 16,000 GPUs at an approximate cost of $60 million USD (excluding hardware costs), a significant investment from Meta, in a process that took around 80 days. This consumption resulted in the release of approximately 5,000 tons of CO₂-equivalent greenhouse gases (based on the European average), although this figure can easily double depending on the country where the model was trained.

towardsdatascience.com

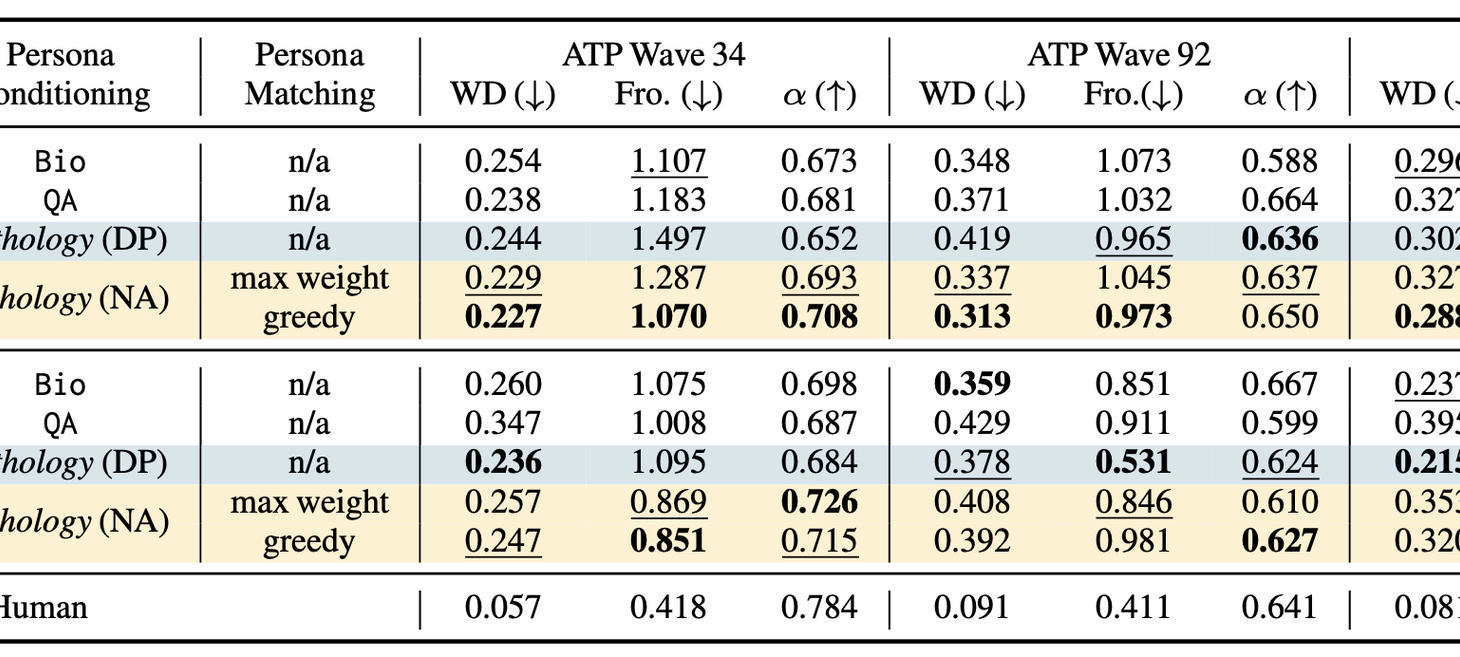

Virtual Personas for Language Models via an Anthology of Backstories

We introduce Anthology, a method for conditioning LLMs to representative, consistent, and diverse virtual personas by generating and utilizing naturalistic backstories with rich details of individual values and experience.

bair.berkeley.edu