Global AI Weekly

Issue number: 83 | Tuesday, February 4, 2025

Highlights

Sora rival Pika just dropped a new video AI model

AI video creator Pika Labs is keen to stand out from possible competitors like OpenAI and Sora, and its newest model adds some real power to that effort. Pika 2.1 added a whole suite of new and upgraded features just a little over a month after Pika 2.0 dropped.

techradar.com

NHS to launch world’s biggest trial of AI breast cancer diagnosis

If successful, the scheme could speed up testing and reduce radiologists’ workload by around half. The NHS is launching the world’s biggest trial of artificial intelligence to detect breast cancer, which could lead to faster diagnosis of the disease. AI will be deployed to analyse two-thirds of at least 700,000 mammograms done in England over the next few years to see if it is as accurate and reliable at reading scans as a radiologist.

theguardian.com

OpenAI’s new trademark application hints at humanoid robots, smart jewelry, and more

Last week OpenAI filed a new application to trademark products associated with its brand. Normally, this wouldn’t be newsworthy. Companies file for trademarks all the time. But in the application, OpenAI hints at new product lines both nearer-term and of a more speculative nature.

techcrunch.com

Research

The Price of Intelligence - Three risks inherent in LLMs

LLMs (large language models) have experienced explosive growth in capability, proliferation, and adoption across both consumer and enterprise domains. These models, which have demonstrated remarkable performance in tasks ranging from natural-language understanding to code generation, have become a focal point of artificial intelligence research and applications. In the rush to integrate these powerful tools into the technological ecosystem, however, it is crucial to understand their fundamental behaviors and the implications of their widespread adoption.

queue.acm.org

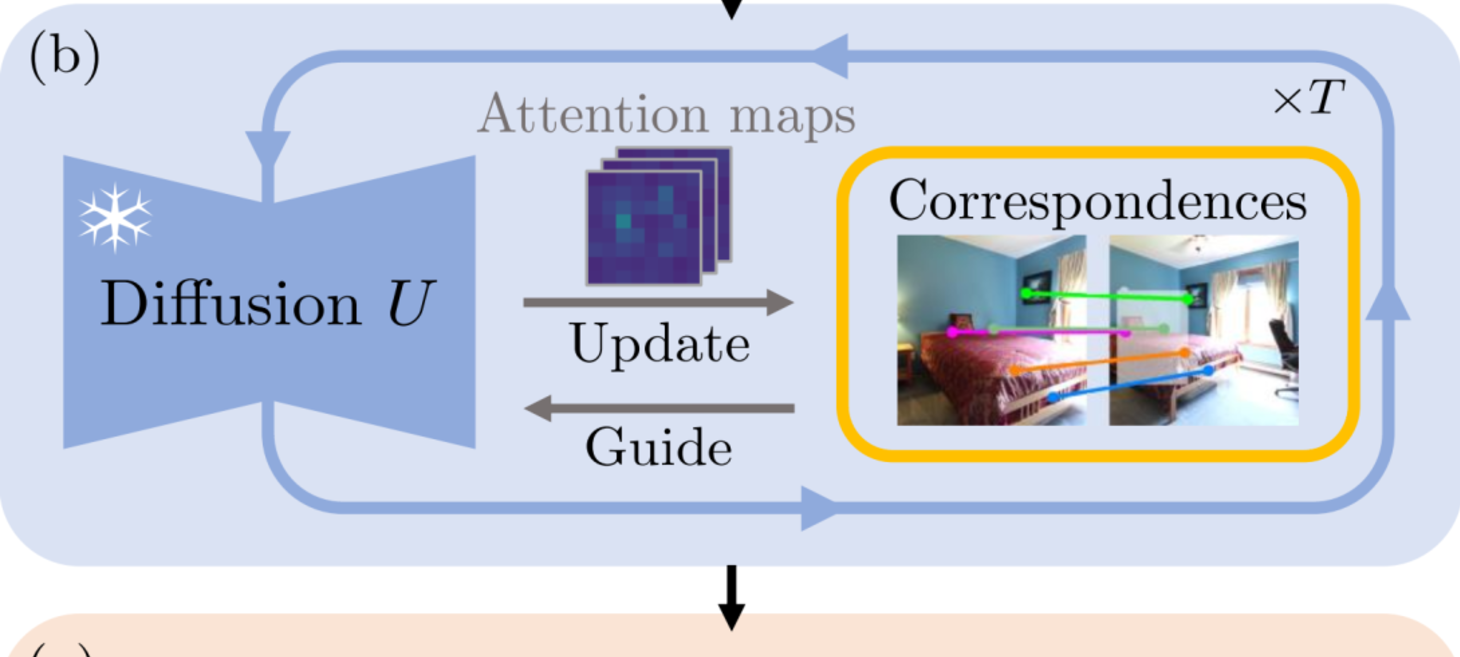

CorrFill: Enhancing Faithfulness in Reference-based Inpainting with Correspondence Guidance in Diffusion Models

In the task of reference-based image inpainting, an additional reference image is provided to restore a damaged target image to its original state. The advancement of diffusion models, particularly Stable Diffusion, allows for simple formulations in this task. However, existing diffusion-based methods often lack explicit constraints on the correlation between the reference and damaged images, resulting in lower faithfulness to the reference images in the inpainting results.

research.nvidia.com

Constitutional Classifiers: Defending against universal jailbreaks

Large language models (LLMs) are vulnerable to universal jailbreak-prompting strategies that systematically bypass model safeguards and enable users to carry out harmful processes that require many model interactions, like manufacturing illegal substances at scale. To defend against these attacks, this paper introduces Constitutional Classifiers: safeguards trained on synthetic data, generated by prompting LLMs with natural language rules (i.e., a constitution) specifying permitted and restricted content.

arxiv.org

Video

Silicon Minds, Human Hearts - Amy Boyd

In this episode, we delve into the day-to-day life of cloud advocate Amy Boyd, the intriguing world of AI, and the challenges and opportunities that come with it. Amy shares her passion for data, her journey into AI, and offers valuable advice for those eager to dive into the field. We also discuss the importance of responsible AI and the layers of defense required to ensure its ethical use.

youtube.com

Getting started with Generative AI in Azure

Discover the basics of generative AI, including core models and functionalities. Learn how to utilize these models within the Azure ecosystem, leveraging various services to build your own generative AI applications. Tune in on 4th February at 9AM PST (6PM CET) to join the upcoming Reactor live-streaming or watch later on-demand.

techcommunity.microsoft.com

Articles

The Dark Side of AI: 10 Ways Artificial Intelligence Could Be Dangerous

AI is changing the world, but not all of it is good news. While it brings convenience and innovation, there’s a darker side to this technology that we often overlook. From deepfakes and job automation to privacy violations and AI-powered cyberattacks, the risks are growing as fast as the technology itself. This article explores 10 ways AI could be dangerous — and why we need to be more cautious about the future we’re building.

techwhisper.medium.com

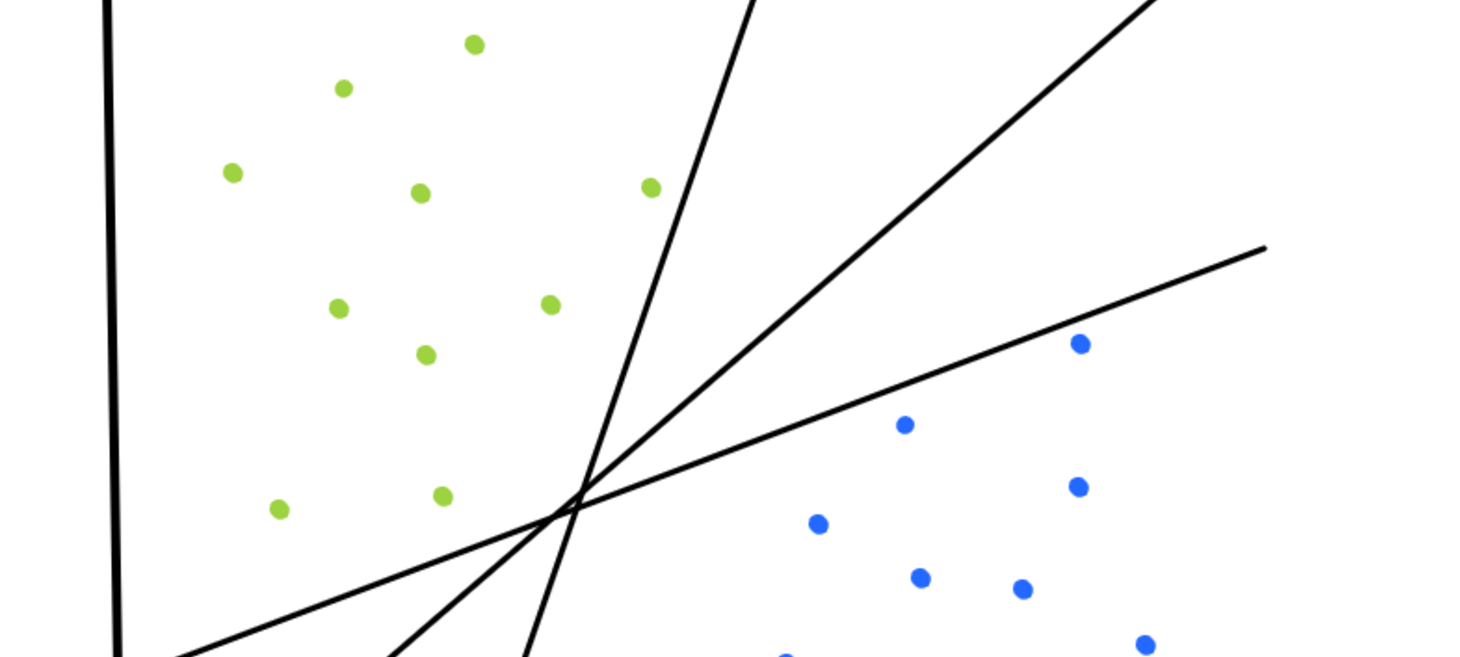

Support Vector Machines: A Progression of Algorithms

The Support Vector Machine (SVM) is a popular learning algorithm used for many classification problems. SVMs are known to be useful out of the box (not much manual configuration required), and they are valuable for applications where knowledge of the class boundaries is more important than knowledge of the class distributions. When working with SVMs, you may hear people mention Support Vector Classifiers (SVC) or Maximal Margin Classifiers (MMC). While these algorithms are all related, there are important distinctions among the three.

medium.com

OpenAI launched deep research capabilities for ChatGPT

Last week OpenAI launched deep research in ChatGPT, a new agentic capability that conducts multi-step research on the internet for complex tasks. It accomplishes in tens of minutes what would take a human many hours. Deep research is OpenAI's next agent that can do work for you independently—you give it a prompt, and ChatGPT will find, analyze, and synthesize hundreds of online sources to create a comprehensive report at the level of a research analyst.

openai.com

Running DeepSeek-R1 Locally: A Step-by-Step Guide

Whether you’re a developer, researcher, or AI enthusiast, running DeepSeek-R1 locally gives you full control over data privacy and performance optimization.

medium.com

Upcoming Events

Global AI Bootcamp 2025

The Global AI Bootcamp is an annual event that occurs worldwide, where developers and AI enthusiasts can learn about AI through workshops, sessions, and discussions. Local chapters of the Global AI…

globalai.community

Code

Auto-AVSR: Audio-Visual Speech Recognition with Automatic Labels

This repository is an open-sourced framework for speech recognition, with a primary focus on visual speech (lip-reading). It is designed for end-to-end training, aiming to deliver state-of-the-art models and enable reproducibility on audio-visual speech benchmarks.

github.com