Global AI Weekly

Issue number: 90 | Tuesday, March 25, 2025

Highlights

Inside Google’s Two-Year Frenzy to Catch Up With OpenAI

Google, once seen as the leader in AI innovation, found itself scrambling to catch up with OpenAI's advances in the chatbot race. The company responded with intense work schedules, layoffs, and a greater willingness to take risks, including easing certain restrictions. This high-pressure push shows how determined Google is to reclaim its dominant position in the rapidly evolving field of artificial intelligence.

wired.com

Norwegian files complaint after ChatGPT falsely said he had murdered his children

A Norwegian man, Arve Hjalmar Holmen, is taking action after ChatGPT, in response to a prompt, falsely accused him of a heinous crime. Although he has no criminal record and has never been accused of a crime, the chatbot produced the defamatory claim that he had murdered his children. Holmen has filed a formal complaint, raising concerns about the harm AI-generated misinformation can cause.

theguardian.com

Research

Why Do Multi-Agent LLM Systems Fail?

This research examines why multi-agent large language model systems often fail to live up to their potential. It identifies the key factors behind their limitations and pinpoints where these systems break down, both in task performance and in collaboration between agents. The result is a clearer picture of what makes effective multi-agent LLM frameworks hard to build and which core issues future work needs to address.

huggingface.co

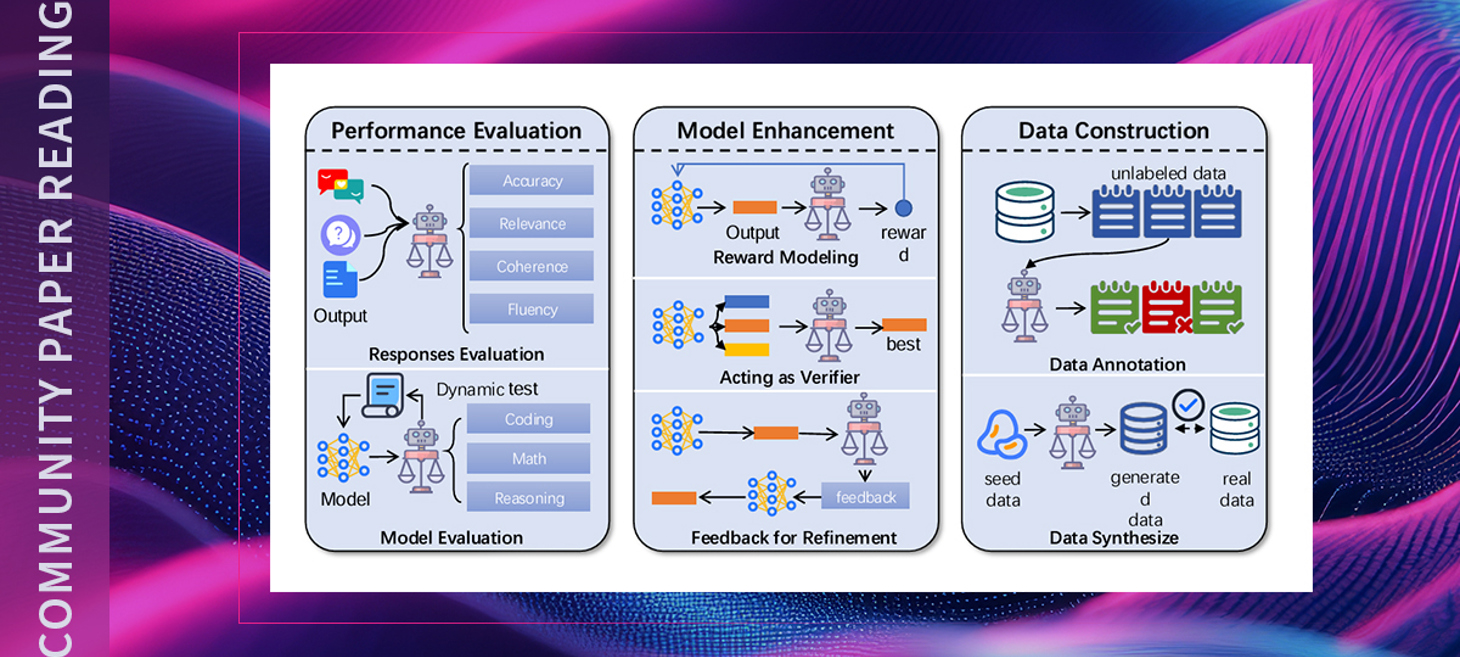

LLMs as Judges: A Comprehensive Survey on LLM-Based Evaluation Methods

This summary covers the findings of an in-depth survey on using large language models (LLMs) as judges in evaluation. It describes the limitations and challenges these models face as evaluators and discusses strategies to mitigate them. The research offers useful insight into how reliable and effective LLM-based assessments are.
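One frequently discussed problem with LLM judges is position bias: in pairwise comparisons the judge may favor whichever answer appears first. A common mitigation, shown here as an illustration and not as the survey's own method, is to ask the judge twice with the answers swapped and accept a verdict only if it survives the swap. This is a minimal sketch assuming a hypothetical `judge(question, first, second)` callable that returns "A" or "B"; the stub judge is a toy heuristic standing in for a real LLM call.

```python
from typing import Callable, Literal

Verdict = Literal["A", "B"]
# Hypothetical judge signature: returns the preferred position ("A" = first, "B" = second).
Judge = Callable[[str, str, str], Verdict]


def debiased_compare(judge: Judge, question: str, answer_1: str, answer_2: str) -> str:
    """Return "answer_1", "answer_2", or "tie" if the verdict flips when the order is swapped."""
    # First pass: answer_1 shown in position A.
    first = judge(question, answer_1, answer_2)
    # Second pass: order swapped, answer_2 shown in position A.
    second = judge(question, answer_2, answer_1)

    # Map positional verdicts back to the underlying answers.
    winner_first = "answer_1" if first == "A" else "answer_2"
    winner_second = "answer_2" if second == "A" else "answer_1"

    # Trust the verdict only when both orderings agree.
    return winner_first if winner_first == winner_second else "tie"


def biased_stub_judge(question: str, first: str, second: str) -> Verdict:
    """Toy stand-in for an LLM judge: prefers the longer answer, ties go to position A."""
    if len(second) > len(first):
        return "B"
    return "A"


if __name__ == "__main__":
    q = "What is 2 + 2?"
    print(debiased_compare(biased_stub_judge, q, "4", "The answer is 4."))
    print(debiased_compare(biased_stub_judge, q, "four", "4 ok"))
```

In the second call both answers have the same length, so the stub falls back to its position preference, the two passes disagree, and the comparison is reported as a tie instead of a spurious win.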

arize.com

Claimify: Extracting high-quality claims from language model outputs

Claimify is a claim-extraction method developed by Microsoft Research that uses large language models (LLMs) to extract claims from LLM outputs with greater accuracy and coverage. It outperforms previous approaches, producing claims that are more precise, comprehensive, and well supported. This makes the information derived from LLM outputs more reliable and useful.

microsoft.com

Video

Pamela Fox - Silicon Minds, Human Hearts

In this episode of Silicon Minds, Human Hearts, Pamela Fox, Python Cloud Advocate at Microsoft, shares her journey through the worlds of Python, Azure, and AI. She discusses her mission to help Python developers thrive with tools like Azure, VS Code, and GitHub Copilot, and reflects on her work on the Google Maps API and at Coursera, Khan Academy, and UC Berkeley. Pamela also covers building and deploying AI applications, ethical AI, content safety, and her favorite AI use cases outside of work. It’s an insightful conversation about technology, education, and the human side of AI.

youtube.com

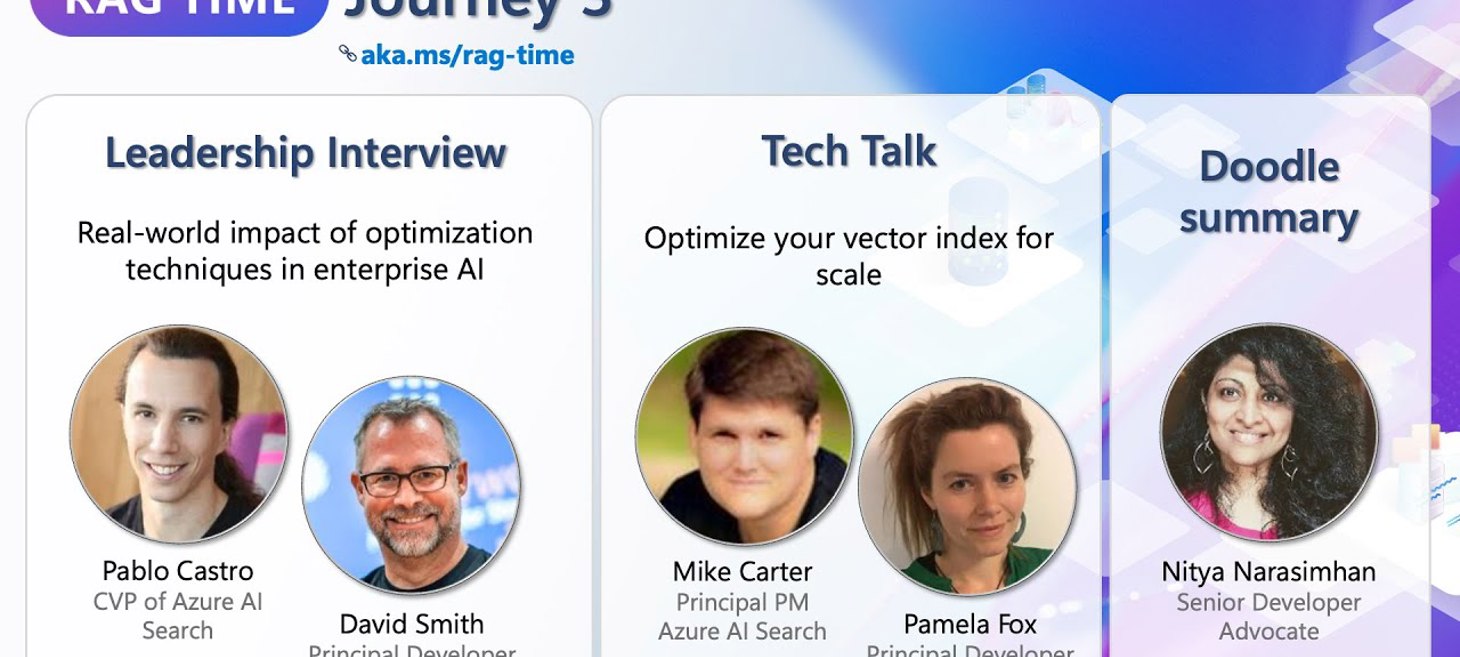

Journey 3: Optimize Your Vector Index for Scale

Pamela Fox and Mike Carter discuss approaches to improving storage efficiency and scaling your vector index. They share practical tips and advanced strategies, including emerging techniques, for boosting performance and handling growth. A good fit for anyone who needs to make a vector index smaller, faster, and ready for scale.
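One widely used storage-efficiency technique for vector indexes is scalar quantization: each float32 component is stored as a signed byte plus one scale factor per vector, cutting raw vector storage roughly fourfold at a small cost in precision. The session's exact techniques are not listed above, so treat this as a generic, self-contained sketch, not how any particular search service implements it; the function names are illustrative.

```python
import math
from array import array


def quantize_int8(vector: list[float]) -> tuple[array, float]:
    """Symmetric per-vector scalar quantization of floats to int8 codes."""
    max_abs = max((abs(x) for x in vector), default=0.0)
    # Map [-max_abs, max_abs] onto [-127, 127]; use scale 1.0 for an all-zero vector.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = array("b", (round(x / scale) for x in vector))
    return codes, scale


def dequantize(codes: array, scale: float) -> list[float]:
    """Approximately reconstruct the original floats from the int8 codes."""
    return [c * scale for c in codes]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity, used here to check how much the quantization distorts a vector."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


if __name__ == "__main__":
    original = [0.12, -0.98, 0.45, 0.003, -0.31, 0.77]
    codes, scale = quantize_int8(original)

    # array("f") stores 4-byte floats; array("b") stores 1-byte signed integers.
    float32_bytes = array("f", original).itemsize * len(original)
    int8_bytes = codes.itemsize * len(codes)
    print(f"float32: {float32_bytes} bytes, int8: {int8_bytes} bytes (+ one scale per vector)")

    restored = dequantize(codes, scale)
    print(f"cosine(original, restored) = {cosine(original, restored):.5f}")
```

Because each component is rounded to the nearest code, the reconstruction error per component is at most half the scale, which is why similarity between a vector and its quantized copy stays very close to 1.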

aka.ms

Articles

Mistral Small 3.1 (25.03) is now generally available in GitHub Models · GitHub Changelog

Mistral Small 3.1 (25.03) is now available in GitHub Models, with strong capabilities in programming, mathematical problem-solving, conversational dialogue, and complex document analysis. This versatile model can support developers across a wide range of applications and workflows.

github.blog

Self-nomination for reviewing at NeurIPS 2025

The article discusses the process of self-nominating to become a reviewer for NeurIPS 2025. It highlights the significance of reviewers in maintaining the quality of submissions and provides details on how interested individuals can apply. The post also emphasizes the importance of diverse and dedicated contributors in ensuring a fair and robust review process.

blog.neurips.cc

Mastering Prompt Engineering with Functional Testing: A Systematic Guide to Reliable LLM Outputs | Towards Data Science

This guide shows how a systematic approach built on algorithmic testing and input/output data fixtures can make prompt engineering for complex AI tasks more reliable. Functional testing gives a structured way to evaluate and refine prompts for large language models (LLMs) and to check that their outputs are consistent and dependable. Useful for anyone looking for practical, effective strategies to improve their prompt engineering.
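The idea of pairing input fixtures with algorithmic checks can be sketched in a few lines. This is a minimal illustration, not the guide's own code: `call_model` is a hypothetical stand-in for a real LLM call (here a deterministic stub), and each fixture pairs an input with a programmatic check on the output instead of an exact expected string. Each fixture runs several times and reports a pass rate, since real LLM outputs can vary between runs.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class Fixture:
    """One functional test case: an input plus an algorithmic check on the output."""
    name: str
    user_input: str
    check: Callable[[str], bool]


PROMPT_TEMPLATE = "Extract the numbers from the text and return them as a JSON list.\nText: {text}"


def call_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; this stub parses integers deterministically."""
    text = prompt.split("Text: ", 1)[1]
    numbers = [int(tok) for tok in text.replace(",", " ").split() if tok.isdigit()]
    return json.dumps(numbers)


def is_json_list_of_ints(output: str) -> bool:
    """Structural check: the output must parse as a JSON list of integers."""
    try:
        value = json.loads(output)
    except json.JSONDecodeError:
        return False
    return isinstance(value, list) and all(isinstance(v, int) for v in value)


def run_suite(fixtures: list[Fixture], runs_per_fixture: int = 3) -> dict[str, float]:
    """Run each fixture several times and return its pass rate (0.0 to 1.0)."""
    results = {}
    for fx in fixtures:
        prompt = PROMPT_TEMPLATE.format(text=fx.user_input)
        passes = sum(fx.check(call_model(prompt)) for _ in range(runs_per_fixture))
        results[fx.name] = passes / runs_per_fixture
    return results


FIXTURES = [
    Fixture("valid_json", "I bought 3 apples and 12 pears", is_json_list_of_ints),
    Fixture(
        "correct_values",
        "Rooms 101, 202 and 303",
        lambda out: is_json_list_of_ints(out) and sorted(json.loads(out)) == [101, 202, 303],
    ),
    Fixture(
        "no_numbers",
        "nothing to see here",
        lambda out: is_json_list_of_ints(out) and json.loads(out) == [],
    ),
]

if __name__ == "__main__":
    for name, rate in run_suite(FIXTURES).items():
        print(f"{name}: {rate:.0%} pass rate")
```

Checking properties of the output (valid JSON, correct set of values) rather than comparing exact strings keeps the tests meaningful even when a model phrases or orders its answer differently.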

towardsdatascience.com

Upcoming Events

Global AI Bootcamp 2025: March 29 - April 4

The Global AI Bootcamp is a worldwide event bringing developers and AI enthusiasts together to explore advancements in Artificial Intelligence. Taking place from March 29 to April 4, 2025, this annual gathering offers opportunities to learn, share insights, and connect with others passionate about AI. Hosted by local communities, the event fosters collaboration and innovation across the globe.

globalai.community

Code

LangGraph: Build Stateful AI Agents in Python – Real Python

LangGraph is a Python library for building stateful, cyclic, and multi-actor applications with large language models (LLMs). This tutorial walks through the basics of LangGraph with practical examples, showing its flexibility and the tools it offers for creating custom LLM workflows and agents. Learn how to use LangGraph's features to design efficient, adaptable AI solutions.
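To make "stateful" and "cyclic" concrete without depending on LangGraph itself, here is a plain-Python sketch of the underlying idea: nodes are functions that read a shared state and return updates, and a routing function chooses the next node, which may loop back to an earlier one. This illustrates the concept only; LangGraph's real API (built around its `StateGraph` class) differs, and `TinyGraph`, `draft`, and `review` are hypothetical names.

```python
from typing import Callable, Optional

State = dict
Node = Callable[[State], State]
# A router inspects the state after a node runs and names the next node (None = stop).
Router = Callable[[State], Optional[str]]


class TinyGraph:
    """Hypothetical minimal state machine illustrating a cyclic, stateful workflow."""

    def __init__(self) -> None:
        self.nodes: dict[str, Node] = {}
        self.routers: dict[str, Router] = {}

    def add_node(self, name: str, fn: Node, router: Router) -> None:
        self.nodes[name] = fn
        self.routers[name] = router

    def run(self, start: str, state: State, max_steps: int = 20) -> State:
        current: Optional[str] = start
        steps = 0
        while current is not None:
            if steps >= max_steps:
                raise RuntimeError("step limit reached; possible infinite loop")
            # Each node returns a partial update that is merged into a new state dict.
            state = {**state, **self.nodes[current](state)}
            state["trace"] = state.get("trace", []) + [current]
            current = self.routers[current](state)
            steps += 1
        return state


def draft(state: State) -> State:
    # Stand-in for an LLM call that writes or revises a draft.
    return {"draft": state.get("draft", "") + "x", "revisions": state.get("revisions", 0) + 1}


def review(state: State) -> State:
    # Stand-in for a critic; approves once the draft is long enough.
    return {"approved": len(state["draft"]) >= 3}


graph = TinyGraph()
graph.add_node("draft", draft, router=lambda s: "review")
# The cycle: review routes back to draft until the draft is approved.
graph.add_node("review", review, router=lambda s: None if s["approved"] else "draft")

if __name__ == "__main__":
    final = graph.run("draft", {})
    print(final["draft"], final["revisions"], final["trace"])
```

The step limit matters once cycles are allowed: without it, a router that never reaches its stop condition would loop forever.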

realpython.com

OpenReg: A Self-Contained PyTorch Out-of-Tree Backend Implementation Using

OpenReg is a standalone example showcasing how to implement a PyTorch out-of-tree backend using the "PrivateUse1" feature of the core framework. It aims to provide a practical guide for backend developers and offers an experimental foundation for trying out novel ideas without modifying PyTorch's core. This resource is designed to support learning and innovation in backend development.

pytorch.org

Podcast

NVIDIA AI Podcast

The NVIDIA AI Podcast brings you engaging 30-minute interviews each week, featuring inspiring individuals showcasing the transformative impact of AI across various fields. From tracking endangered wildlife to decoding starlight from ancient galaxies, and optimizing supply chains, each episode highlights unique perspectives and groundbreaking applications. With nearly 3.4 million listens, this podcast offers a fascinating oral history of AI, perfect for anyone curious about the technology shaping our world.

open.spotify.com